Standard normal variate correction details

This mathematical operation was originally developed to reduce spectral noise and eliminate background effects in NIR data. In NIR measurements, non-specific scattering of radiation at the surface of particles, variable spectral path length through the sample, and the chemical composition of the sample typically cause baseline shifting or tilting. The influence is larger at longer wavelengths. Such multiplicative interference from scatter and particle size can be eliminated or minimized by applying a standard normal variate correction.

Please review the Standard Normal Variate Correction command in the Mathematics menu section for details on how to perform this operation.

Detrending is very often applied in addition to the standard normal variate correction in order to remove offset and tilting more thoroughly. Both operations can be applied at once using the Linear least squares Baseline Correction of the software.
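To illustrate what detrending does, the following minimal sketch fits a straight line to a spectrum by least squares and subtracts it. It is not the software's implementation: the function name `detrend_linear`, the use of the point index as the x-axis, and the first-order (linear) fit are all assumptions made for this example.

```python
import numpy as np

def detrend_linear(spectrum):
    """Subtract a least-squares straight line from one spectrum.

    Hypothetical helper for illustration only; the x-axis is simply
    the point index, which is an assumption.
    """
    spectrum = np.asarray(spectrum, dtype=float)
    x = np.arange(spectrum.size)
    # np.polyfit returns coefficients from highest degree to lowest.
    slope, intercept = np.polyfit(x, spectrum, deg=1)
    return spectrum - (slope * x + intercept)

# Synthetic data: a single peak, then the same peak with offset and tilt added.
x = np.arange(6)
peak = np.array([0.0, 0.0, 1.0, 0.0, 0.0, 0.0])
tilted = peak + 0.2 * x + 0.5

flattened = detrend_linear(tilted)
```

Because the added offset and tilt are themselves a straight line, the fit absorbs them completely, so `detrend_linear(tilted)` and `detrend_linear(peak)` give the same result.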

Standard normal variate correction algorithm

The standard normal variate algorithm is designed to work on individual sample spectra. The transformation centres each spectrum and then scales it by its own standard deviation:

$$A_{ij}^{(\mathrm{SNV})} = \frac{A_{ij} - x_i}{\mathrm{SDev}_i}$$

where

$i$ = spectrum counter

$j$ = absorbance value counter of the ith spectrum

$A_{ij}^{(\mathrm{SNV})}$ = corrected absorbance value

$A_{ij}$ = measured absorbance value

$x_i$ = mean absorbance value of the uncorrected ith spectrum

$\mathrm{SDev}_i$ = standard deviation of the absorbance values of the ith spectrum

Spectra treated in this manner always have a mean of zero and a variance of one, and are thus independent of the offset and scale of the original absorbance values.
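The transformation can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the software's own code: the function name `snv`, the row-per-spectrum layout, and the choice of the sample standard deviation (`ddof=1`) are assumptions, since the text above does not say which standard deviation estimator is used.

```python
import numpy as np

def snv(spectra, ddof=1):
    """Standard normal variate correction, applied to each row separately.

    spectra: 2-D array with one spectrum per row (assumed layout).
    ddof=1 selects the sample standard deviation (an assumption).
    """
    spectra = np.asarray(spectra, dtype=float)
    # Mean and standard deviation are computed per spectrum, not per wavelength.
    mean = spectra.mean(axis=1, keepdims=True)
    sdev = spectra.std(axis=1, ddof=ddof, keepdims=True)
    return (spectra - mean) / sdev

# Synthetic data: the second spectrum is the first one scaled and offset.
base = np.array([0.10, 0.25, 0.40, 0.30, 0.15])
spectra = np.vstack([base, 2.0 * base + 0.5])

corrected = snv(spectra)
```

After the correction both rows of `corrected` coincide, because a constant offset and a constant scale factor are removed by centring and scaling each spectrum individually.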

Standard normal variate correction example

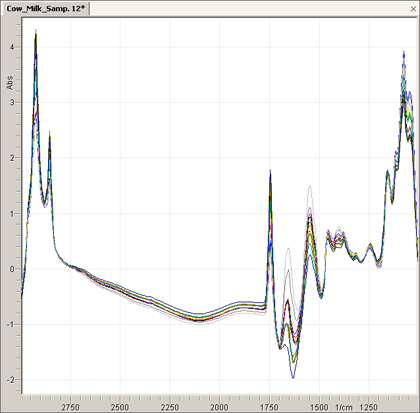

This operation is usually applied to multiple data objects. In the following example some NIR spectra of cow milk are shown in one data view:

After completion of the standard normal variate correction, spectra look like this: